Figure 6.1: Sorting algorithm visualized in animated color

All of my implementation for sorting algorithms is available on Github. Below are the guidance to approach different sorting algorithms and practice problems:

I) IMPLEMENTATION BY DEFINITION:

- Merge sort:

Let’s break down the algorithm for Merge sort:

Suppose: Given an array, perform Merge sort on the list of numbers inside the array bounded by a given starting index and ending index.

– First, we check if the ending index is greater than the starting index. If it is not, the list has only one element and thus is already sorted. We only proceed if the ending index is greater than the starting index.

– Next, we split the given list into half, and then we call the recursive function on both sides the list. Thinking recursively, we will basically break down the list into a bunch of lists that only has one number inside it.

– After the recursive splitting, we merge the two split lists together. Thinking recursively, after we break down the list into a bunch of one-number lists, those lists are basically sorted. When we perform merge operation, we will be merging two sorted lists together each time. We will keep merging until we obtained the complete sorted list.

- Insertion sort:

Insertion sort is relatively easier to implement compared to Merge sort. The algorithm is simple: at the beginning, we have an empty sorted list and a unsorted list given as input. Every cycle we find the minimum number in the current input list and insert it into the correct position inside the sorted list until we go through the entire list.

- Bubble sort:

Just like insertion sort, bubble sort is relative easy to implement. Its algorithm is also very straight forwards: We go through the list every cycle, checking whether two consecutive numbers are in reverse order so that we can swap them, until no more swaps are required.

- Quick sort:

Similar to Merge sort, Quick sort uses Divide and Conquer algorithm to break down the input list:

– Every cycle, pick an element as a pivot (I chose the left-most element in my code on Github)

– For each cycle: do swapping for the rest of the list so that all the elements with smaller or equal value to the pivot are on the left hand side, while elements with greater values are on the right hand side. After that, insert the pivot into the correct position.

- Heap sort:

First of all, as Binary heap is a Complete Binary Tree, it can be viewed as an array with the following rule: the parent node is placed at index i, the left child node is placed at 2*i + 1 and the right child node is placed at 2*i + 2. This rule applies for both Max Heap and Min Heap. Supposed we want to create a Max Heap, we will follow the steps bellow:

From step 2 to step 3, we need to compare the parent node with its left and right children nodes, if either of them has higher values, we should perform swap operation. This action will happen recursively until we completely cycled through the array, at which point we obtain the desired sorted list. - Counting sort:

The algorithm for counting sort can be divided into steps:

1) Create a new array called Count Array that: at each index, the value stored represent how many times the index value appears in the input array.

2) Adjust Count Array so that each element in the array is the sum of itself and the element before it.

3) Insert elements from the input array into the sorted array with the condition that their indices in the sorted array match the values stored in Count Array at the corresponding indices subtracted by one.

II) IMPLEMENTATION IN PRACTICE:

Question 1: Sort a binary array so that all 0s go before 1s.

For example:

Given: [0,1,1,0]

Output: [0,0,1,1]

Solution:

The easiest way would be count all 0 and 1, then create a new array with the calculated number of 0s and 1s in correct order:

Another approach would be using Quick sort with pivot equal 1:

In both cases, time complexities are O(n) and space complexities are O(1) (since the sorting happens in place).

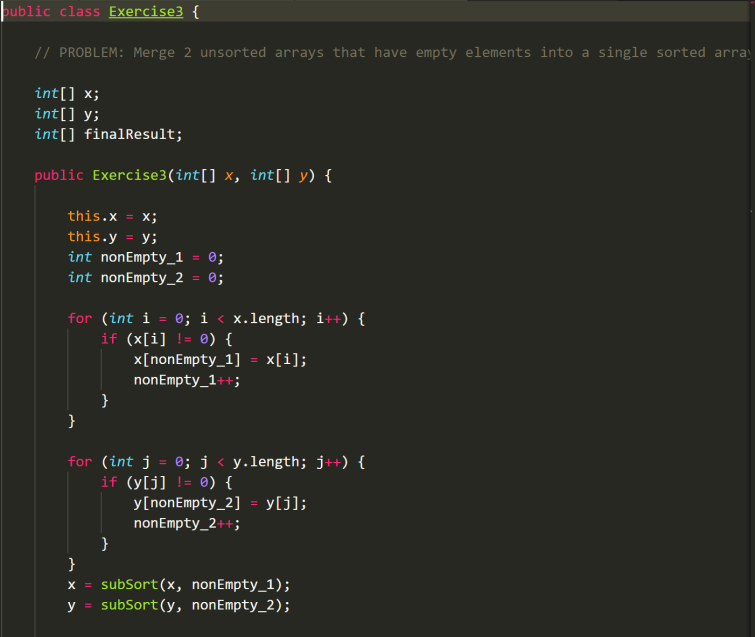

Question 2: Find the smallest missing element from the sorted array that has the logic: the value stored in an index is equal to the index itself, given time complexity equal O(log(n)).

For example:

Given: [0, 1, 2, 3, 4, 5, 6, 9, 10]

Output: 7

Given: [1,3,4,5]

Output: 0

Solution:

At first glance, we can easily solve this by using linear search. However, this will result in O(n) for time complexity, which is ineffective. Instead, we can use divide and conquer algorithm: every time we split it in half, we consider whether the middle index of the array is still following the logic or not. If yes, we proceed to split the larger half; if no, we trace back and split the smaller half – the process will then run on recursively and result in time complexity equal O(log(n)).

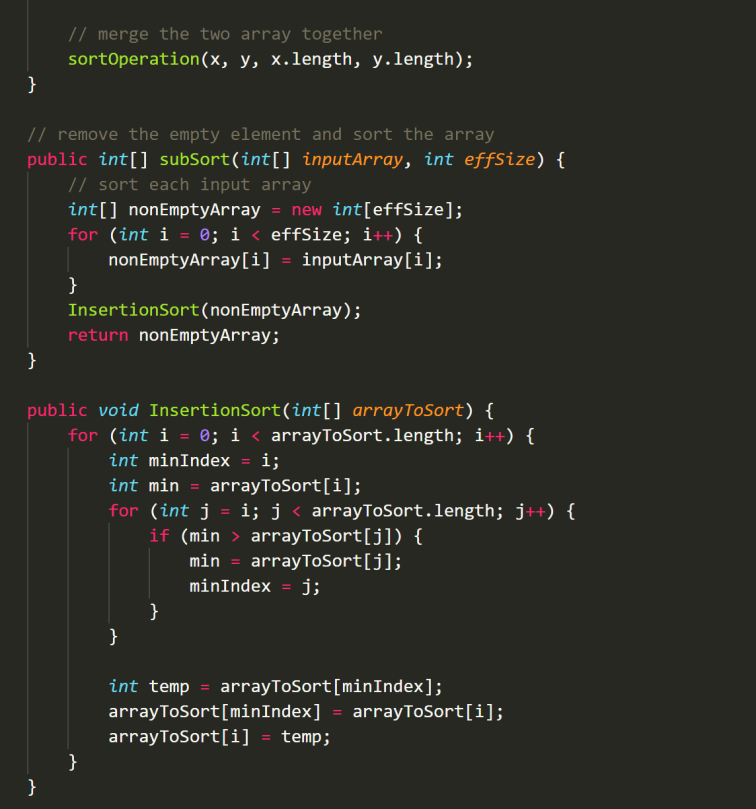

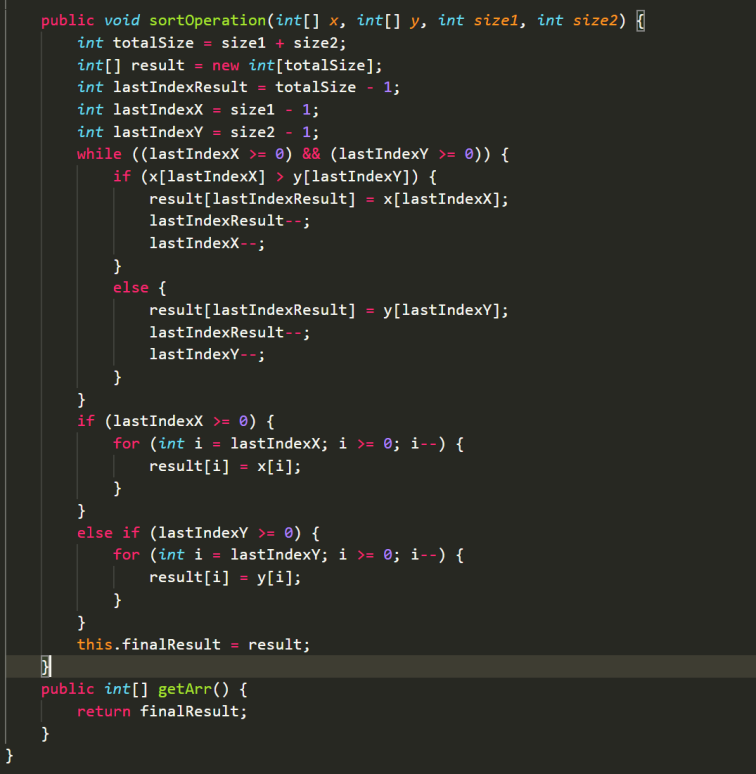

Question 3: Merge 2 unsorted arrays that have empty elements into a single sorted array

For example:

Given: [0, 0 , 2, 5, 4, 1]

[6, 3, 4, 5]

Output: [1, 2, 3, 4, 4, 5, 5, 6]

Solution:

This problem is more complicated compared to the previous two, so it can be broken down into two sub problem: 1) Remove empty element and sort the two individual arrays (I used Insertion Sort) and 2) Merge the two array together.

SOME FURTHER PRACTICE PROBLEMS:

500 Data Structures and Algorithms practice problems and their solutions

Sorting Challenges by Hackerank